What Is Overoptimization in MT5?

When you test an EA (Expert Advisor) in MetaTrader 5 (MT5), you usually adjust settings such as stop loss, take profit, or indicator values to get the best results on past data. This is called optimization.

Overoptimization happens when you tweak the settings too much just to make the EA look perfect on historical data. You’re basically training it to “memorize the past” instead of learning how to handle different market conditions.

Why Is Overoptimization a Problem?

Because the market never moves exactly the same way twice. If your EA is only good at handling one specific pattern from the past, it will likely fail in live trading when conditions change.

Think of it like this:

Imagine studying for a test by memorizing all the answers to last year’s exam. If this year’s questions are different, you’re in trouble.
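The same effect can be reproduced in a few lines of Python. This is only a minimal sketch under stated assumptions: the prices are a seeded random walk (pure noise), and the moving-average crossover rule, the `backtest` and `optimize` helpers, and the parameter grid are all hypothetical stand-ins, not the EA or the MT5 optimizer discussed in this article.

```python
import random

def make_prices(n, seed):
    """Random-walk prices: pure noise, so no parameter set has a real edge."""
    rng = random.Random(seed)
    prices = [100.0]
    for _ in range(n - 1):
        prices.append(prices[-1] + rng.gauss(0, 1))
    return prices

def sma(prices, end, length):
    """Simple moving average of the `length` prices ending at index `end`."""
    window = prices[end - length + 1 : end + 1]
    return sum(window) / length

def backtest(prices, fast, slow):
    """Toy rule: long when fast SMA > slow SMA, otherwise short.
    Returns total profit in price units, ignoring costs (hypothetical)."""
    profit = 0.0
    for i in range(slow - 1, len(prices) - 1):
        position = 1 if sma(prices, i, fast) > sma(prices, i, slow) else -1
        profit += position * (prices[i + 1] - prices[i])
    return profit

def optimize(prices, grid):
    """Return the (fast, slow) pair with the highest profit on `prices`."""
    return max(grid, key=lambda p: backtest(prices, *p))

prices = make_prices(900, seed=7)
in_sample, out_of_sample = prices[:600], prices[600:]
grid = [(f, s) for f in range(2, 20, 2) for s in range(20, 100, 10)]

# Tune only on the first 600 bars, then reuse the settings unchanged.
best = optimize(in_sample, grid)
print("best settings:", best)
print("in-sample profit:", round(backtest(in_sample, *best), 2))
print("out-of-sample profit:", round(backtest(out_of_sample, *best), 2))
```

The in-sample figure is the best of 72 tries by construction, so it tends to flatter the rule even though the data contains nothing to learn. The out-of-sample figure has no such advantage, which is why it is the more honest number to look at.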

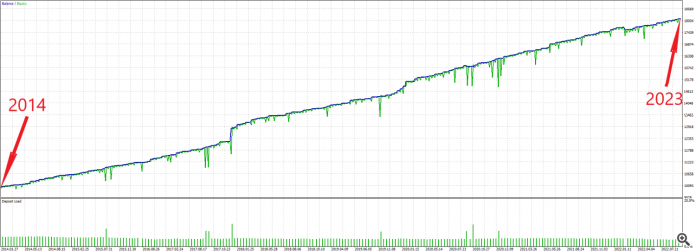

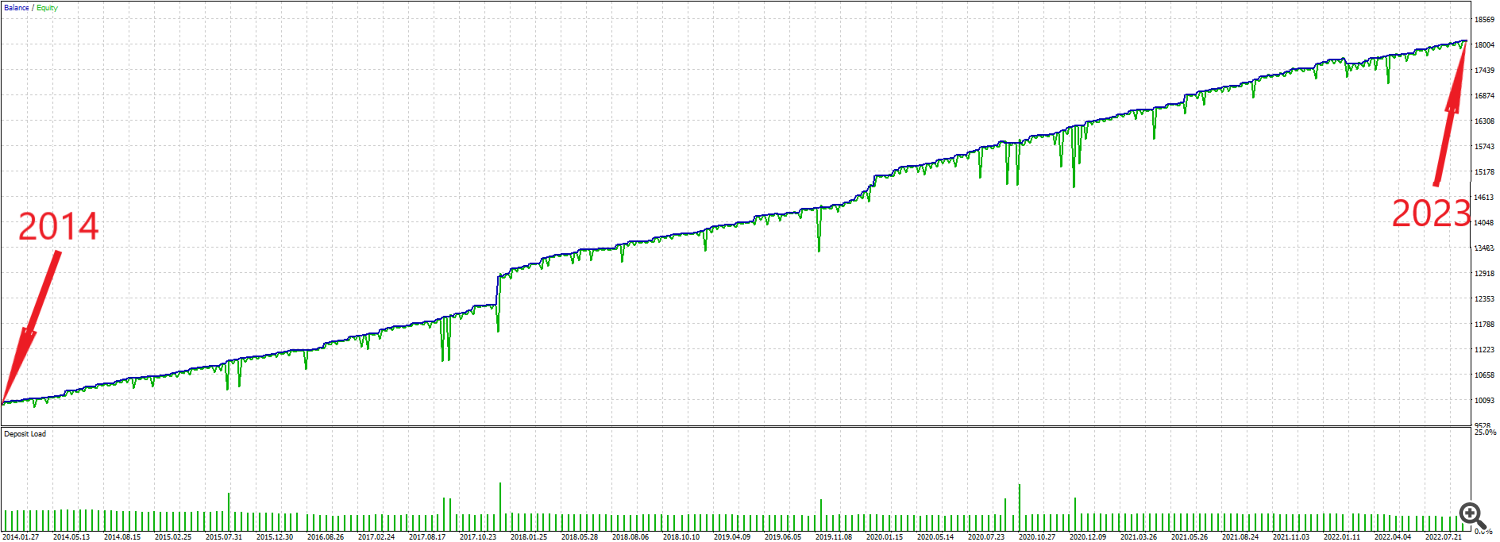

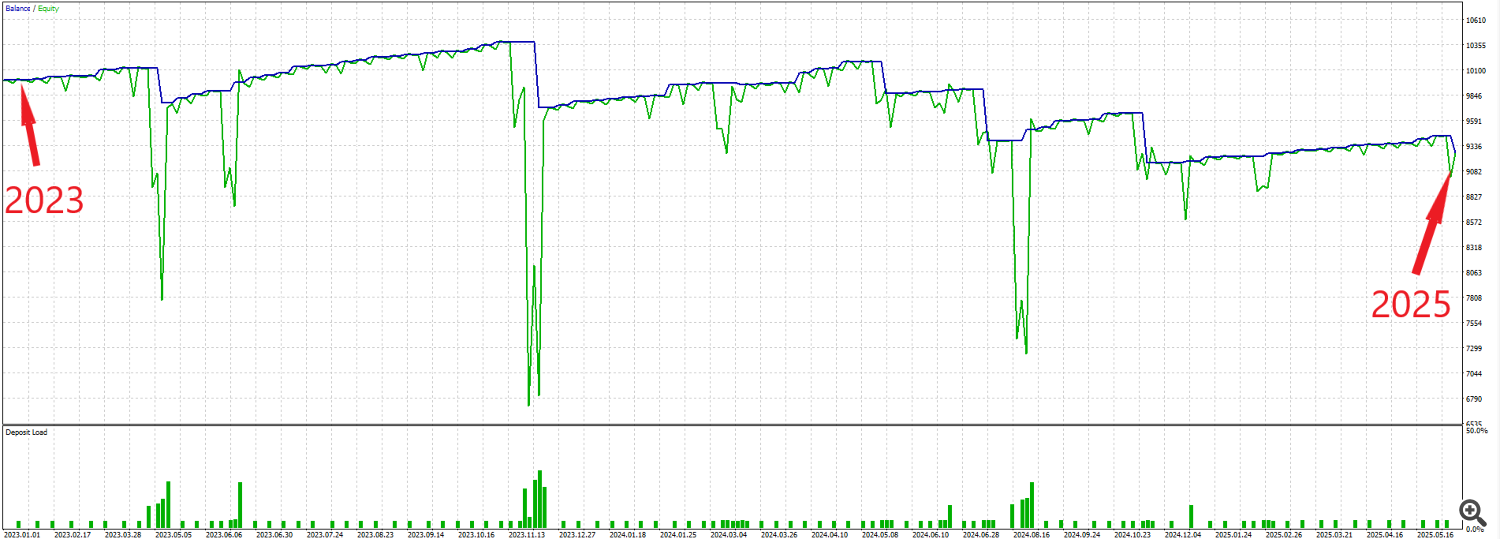

As you can see in the example above, the EA performs perfectly during the optimization period from 2014 to 2023, but as soon as it’s tested outside that period, from 2023 to 2025, it fails.

Here’s Exactly What I Did to Avoid Overoptimization:

1. Optimization Period:

I optimized the EA using historical data from 2015 to the end of 2021.

This means all settings, such as stop loss, take profit, and indicator values, were tuned only on this period.

2. Forward Testing Period:

I then tested the EA from January 2022 to the present without changing or re-optimizing any settings.

This is called forward testing or out-of-sample testing. It’s one of the most reliable ways to see whether an EA can handle real market conditions, because the EA is judged on data it was never tuned on.

3. No Overfitting:

I didn’t adjust the EA to make it look perfect during the 2022 to 2025 period.

What you’re seeing is the EA’s raw and untouched performance, using the exact same settings that were optimized only on pre-2022 data.
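The three steps above can be sketched as a simple check on a list of closed trades. This is a rough illustration, not the procedure used to produce the results in this article: the `split_trades` and `profit_factor` helpers are hypothetical, the trade list is made up, and the only values taken from the text are the 2015 start and the January 2022 cutoff.

```python
from datetime import date

IN_SAMPLE_START = date(2015, 1, 1)
OUT_OF_SAMPLE_START = date(2022, 1, 1)  # settings are frozen from here on

def split_trades(trades):
    """Split (close_date, profit) pairs into in-sample and out-of-sample lists."""
    in_sample = [t for t in trades if IN_SAMPLE_START <= t[0] < OUT_OF_SAMPLE_START]
    out_of_sample = [t for t in trades if t[0] >= OUT_OF_SAMPLE_START]
    return in_sample, out_of_sample

def profit_factor(trades):
    """Gross profit divided by gross loss; inf if there are no losing trades."""
    gross_profit = sum(p for _, p in trades if p > 0)
    gross_loss = -sum(p for _, p in trades if p < 0)
    return float("inf") if gross_loss == 0 else gross_profit / gross_loss

# Made-up trades for illustration only, not results from the EA in this article.
trades = [
    (date(2016, 3, 1), 120.0),
    (date(2018, 7, 15), -40.0),
    (date(2021, 11, 30), 80.0),
    (date(2022, 2, 10), 50.0),
    (date(2023, 9, 5), -25.0),
    (date(2024, 6, 20), 30.0),
]

in_sample, out_of_sample = split_trades(trades)
print("in-sample profit factor:", profit_factor(in_sample))
print("out-of-sample profit factor:", profit_factor(out_of_sample))
```

Comparing the same metric on both sides of the cutoff makes the gap easy to see. A large drop after the cutoff suggests the settings were fitted to the past rather than to anything that persists.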